Visual: Undark; Base imagery via Getty, Unsplash

On a sweltering day in July 2023, a ragtag group of data wonks sat around a table at U Zlatého Tygra, or the Golden Tiger, a historic bar in Prague’s Old Town. A mild sense of outrage hung in the air between jokes about who among them looked the most Medieval. The group was discussing the issue of manipulated images and fabricated data in scientific publishing. Soon someone was passing around a phone showing a black-and-white image with clear traces of tampering. After a couple more rounds, the group made its way across the ornate cobblestone roads. They brimmed with frustration that, until now, had largely been shared only online. “It’s a toxic dump,” an Italian scientist known to the group by his pseudonym, Aneurus Inconstans, said about science. “It’s not about curiosity anymore, it’s just a career.”

These are the sleuths, as the media often refer to them. They are a haphazard collection of international acquaintances, some scientists and some not, from the United States, Ukraine, New Zealand, the United Kingdom, and elsewhere, who are dedicated to uncovering potential manipulation in the scientific literature.

Those present in Prague, and others who couldn’t make the trip, have different strengths and interests. Some are into exposing statistical skullduggery; others are into spotting manipulated images. Some are academics sticking to their field; others more general-interest vigilantes. But all of them are entrenched in an ongoing battle at the heart of science, in which the pressure to publish and the drive for fame and profit have thrown countless images and statistics into question, sometimes cracking pillars of research in the process. The issue is spread across many fields of science, though some have suffered more than others. “The biggest paper in Alzheimer’s disease is a fake,” Inconstans said en route from the bar as the sun set over Old Town. He was sharing an opinion that all the other sleuths had already heard.

The paper in question, published in Nature in 2006, lent credence to a theory that the proliferation of a type of amyloid protein causes Alzheimer’s disease. The amyloid cascade hypothesis, which had all but taken over Alzheimer’s research, has led to billions of dollars going into researching anti-amyloid therapies to slow the disease’s progression.

But a decade and a half later, some sleuths noticed problems with crucial images in that paper. Core data appeared to be fake. Scientists in the field debated about the importance of the paper after the concerns emerged. Some believed that a landmark finding supporting the hypothesis was now unreliable. Others insisted the paper had never been used as proof.

No one could deny, though, that thousands of other publications had cited the research. And, in the sleuths’ eyes, the paper’s popularity made investigating any tampering that much more important. “Part of what we need to do, especially when you’re looking at papers that have been cited, many of them hundreds, and a few of them, thousands, of times, is to make it easier for people to recognize the problems,” said Matthew Schrag, a neurologist who treats Alzheimer’s patients and conducts research on the disease at Vanderbilt University. A correction or a retraction would have served that need and kept with the protocols of science.

Instead, the questions raised about the work revealed cracks that have been slowly eating away at scientific integrity for years. And the sleuths asking those questions would find themselves not just seeking a minor correction but fighting for a greater truth. In a text message to Undark, one man who long went by the pseudonym Smut Clyde before outing himself as David Bimler, a retired perceptual psychologist from New Zealand, wrote: “We are all idealists and united by a wish for the ideals of science to be more like what they used to be.”

Whether they can do anything about it, though, is another question.

##

The Prague group had come together at the invitation of Kevin Patrick, a financial adviser in the Seattle area. Unlike the other guests at the Golden Tiger — which, in addition to Bimler and Inconstans, included Elisabeth Bik, well-known for her skill at identifying tampered images, and several others — Patrick didn’t have a scientific background. Though his investing work occasionally required him to pay attention to drug stocks, he says general curiosity years later led him to discover Retraction Watch, a website for tracking problematic and unreliable papers. As he recalls, Retraction Watch soon led him to PubPeer, a site for discussing published research, where he saw example after example of tampered images.

Following some sleuths’ posts on X, then known as Twitter, got Patrick wondering if he could spot the patterns that people like Bik are so adept at finding. Patrick trained himself and practiced by asking other sleuths on X to weigh in on his suspicions. “I do have a lot of down time,” he said. Eventually he started posting on PubPeer under a pseudonym assigned by the website, partly to protect his finance work and partly because he thought that authors would not take his comments seriously if they knew he was not a scientist. (On X, he uses the name Cheshire.)

One day in 2021, Patrick noticed that a paper he’d called out on PubPeer for having problematic images a couple years prior had been retracted. It was exciting to be part of the sleuthing world. He started looking at more papers, and, because that retracted paper was Alzheimer’s research, he continued in that vein. Soon he was looking at images showing bands of color — some in grayscale, some in fluorescent green or pink — representing the proteins present in samples. Patrick thought the background looked artificially altered. He saw that some sleuths had spotted similar issues in other papers by the same author. One of them was the 2006 paper.

Patrick rarely understands the science he’s looking at. “I’m not even that interested,” he said. But he enjoyed developing his knack for noticing visual patterns. Divorced and empty-nested, he also liked the online camaraderie. For years, Cheshire, Smut Clyde, Inconstans, and others occupied a fringe corner of the internet that remained frustratingly under the radar considering the number of questionable images they were routinely uncovering, most often in the biosciences. (In cases where the sleuths haven’t gone public, Undark is using their pseudonyms, rather than their real names, in keeping with how they are known in the scientific community.) The sleuths bonded over their discoveries, their commitment to scientific accuracy, and, for some, the pushback they received from authors and sometimes journal editors who did not always welcome questions raised about their work.

The problematic 2006 Nature paper was a particularly high-stakes finding. Spotted at a time of reckoning for Alzheimer’s research — a 2019 exposé by the late STAT journalist Sharon Begley chronicled the disproportionate attention given to research directed by the amyloid cascade hypothesis and lent additional weight to a quietly growing belief that the hypothesis was either incomplete or simply wrong — the problematic images would further erode the foundation on which decades of work, costing billions of dollars, had been based.

That’s largely because Patrick wasn’t the only person to notice the issue.

##

Alzheimer’s disease was first reported in 1906. A German psychiatrist named Alois Alzheimer watched a patient progress from paranoid to aggressive to confused and, upon her death, found strange plaques and tangles in her brain. More cases followed and Alzheimer’s disease became an official diagnosis in 1910. It is the most common form of dementia, an umbrella term for a variety of cognitive impairments for which age is a primary risk factor.

In 1984, two scientists discovered that a protein called amyloid beta, or Aβ, was the main component of amyloid plaques. This discovery led to the notion that amyloid beta is behind the formation of tangles that kill neurons, and are the hallmark of Alzheimer’s disease. For many researchers, the amyloid cascade hypothesis, or ACH, brought about a major sense of optimism for helping to solve the mystery of Alzheimer’s disease and carved a potential path to treatment: Stop amyloid beta and thus stop the decline, maybe even reverse it.

The theory tantalized industry and researchers alike. A biotechnology company named Athena Neurosciences and pharmaceutical giant Eli Lilly and Company produced mice containing a mutated version of the human gene for amyloid; their brains became filled with plaque and the animals lost their memory capacity. A breathless report in The New York Times said the tiny Athena could become “the mouse that roared,” for this landmark achievement, which was potentially worth a billion dollars a year if it led to an effective Alzheimer’s drug. Soon, scientists, pharmaceutical companies and grant funders went all in on the ACH.

Not everyone fell in line. Matthew Schrag, the Vanderbilt neurologist, was among the skeptics. Schrag, who holds both a medical degree and a Ph.D., had joined Vanderbilt’s Memory and Alzheimer’s Center and launched his own research lab in the mid-2010s. His training included a stint in the Department of Cell Biology at Yale. There, Schrag was captivated by the rigorous adherence to the scientific method.

Nonconformity, too, was in Schrag’s blood. His grandfather was an atheist whose father had left the Mennonite Church. Schrag’s parents had home-schooled him and his siblings in a small town in Washington. As his career progressed, his commitment to careful science brought him to his own rebellious stance: The ACH was wrong.

When the 2006 Nature paper came under wider scrutiny in 2022, Schrag already believed the evidence against the ACH was far more persuasive than anything in its favor. For one thing, the plaques considered to be a hallmark of the disease also occur in people without it. Also, research had not shown that a build-up of amyloid was a necessary precursor to memory impairment in humans.

Moreover, the decades of research dominated by the ACH had failed to reverse, stop, or even meaningfully slow the progress of the disease. Not a single clinical trial confirmed that targeting amyloid beta could stop or even dramatically slow the progress of the disease. Nonetheless, pharmaceutical companies forged on to move new candidates forward, but nothing panned out.

Not everyone agreed that these facts disproved the causative role of amyloid beta. What Schrag saw as disproof others saw as the necessary and incremental process of understanding the pathology of Alzheimer’s disease. This was simply science at work.

The refusal of the field at large to concede the wrongness of the amyloid cascade hypothesis deepened Schrag’s concerns about an underlying problem. A suspicion that Schrag had long been harboring took root: Alzheimer’s treatment wasn’t stuck because of bad drugs. It was stuck because of bad science.

In 2021, the U.S. Food and Drug Administration approved an Aβ-targeted drug called aducanumab in a controversial decision that prompted the resignation of three scientists involved with the review process. “This might be the worst approval decision that the FDA has made that I can remember,” Harvard Medical School professor Aaron Kesselheim, one of the resigning researchers, told The New York Times.

Biogen, the drug maker, had stopped clinical trials early because it had failed to show a benefit. But the investigators then analyzed a larger dataset to show a positive impact among patients who received higher doses. The FDA advisory committee strongly recommended against approval of the drug, but the agency went against that advice, granting the drug accelerated approval based on the fact that aducanumab destroys amyloid plaques — not because it slows the disease. Billy Dunn, head of the FDA’s Office of Neuroscience at the time, left in 2023 and joined the board of Prothena, a drug company with several Alzheimer’s drugs in the pipeline. (Dunn did not respond to a request for comment forwarded from Undark by Prothena’s senior director of corporate communications Michael Bachner.) Researchers like Schrag feared the approval would keep the focus on what he saw to be a dead-end hypothesis.

He spoke up. “We had been hoping for a recalibration of the field,” he told The Washington Post in 2021, following the approval. “The proof of efficacy just isn’t there,” he told National Geographic.

Statements like these put Schrag on the radar of two scientists, one a neuroscientist and biotechnology entrepreneur, the other a cardiologist in academia, who suspected foul play in the development of another experimental Alzheimer’s drug called simufilam, made by the pharmaceutical company Cassava Sciences. Jordan Thomas, an attorney known for his whistleblowing lawsuits, asked Schrag to review the published studies for a consulting fee of $18,000. Schrag’s investigation found that several key images appeared to have been manipulated to show a benefit. He also flagged that the researchers claimed simufilam altered human brain tissue that had been previously frozen, which seemed extremely hard to believe. (In an email, Craig Boerner, media director at Vanderbilt University Medical Center, noted that Schrag’s investigative work is independent of his employment at Vanderbilt University Medical Center.)

These discoveries rattled Schrag. The major problems he saw led him to doubt the authors’ other studies, many of which involved patients. “I felt that academically I couldn’t let that stand,” he said. (In a statement posted on its website in August 2021, Cassava asserted that allegations made against the company regarding data and image manipulation were “false and misleading.”)

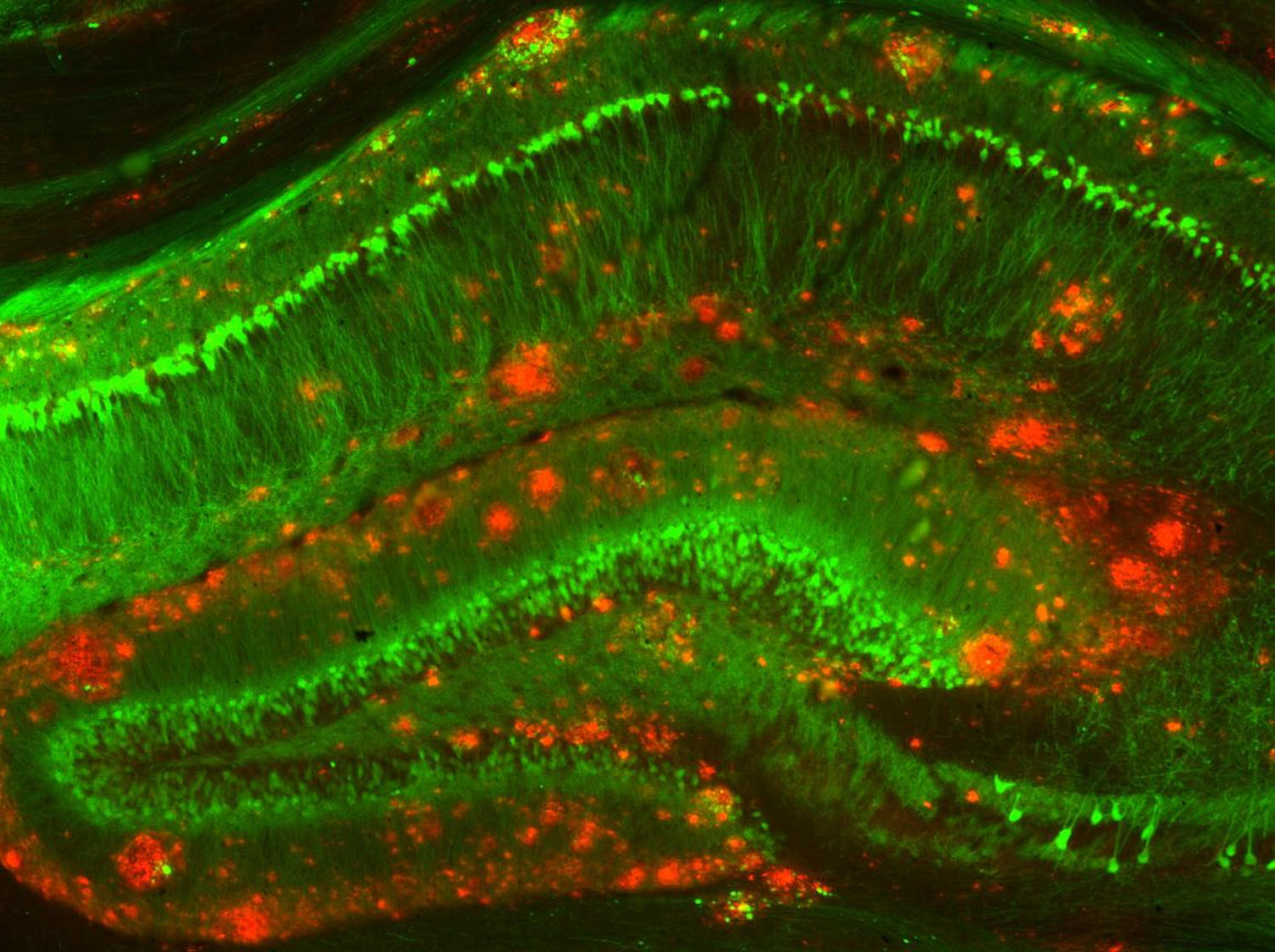

When it came to assessing the integrity of a study, Schrag was particularly focused on the images. The editing techniques used to place politicians in places where they aren’t and to make celebrities look perfect on magazine covers are also ideal for scientists who need to show results where none exist. Sections of a microscopic image can be copied and pasted elsewhere in the frame. Dimming and brightening in grayscale can eliminate errant data from Western blots, a basic lab technique to separate and identify the proteins present in cell or tissue samples that ultimately results in an image in which the protein contents appear as rows of thick black dashes. Images of neurons can be copied, rotated, and placed nearby, creating a fake result. Photoshop and other tools can make a drug look like it’s hitting a target, or a protein appear important to a disease. It can make a failed study look successful.

But going any further with his forensic investigations would require a reliable method for analyzing images. Schrag needed to find some figures that other sleuths had already flagged for manipulation to validate his approach. So, in 2022, he turned to PubPeer and searched for Alzheimer’s studies that had the kind of images he was trying to tackle. The probe returned a few papers, which in turn led him to the 2006 Nature publication.

It reported on a version of amyloid beta called Aβ*56, asserting that mice with excessive amounts of this protein became unable to recall information about their surroundings that they previously knew — not unlike a person with dementia who can no longer find their way home from the supermarket. The study was led by a postdoctoral neuroscientist at the University of Minnesota named Sylvain Lesné, who was working in well-known researcher Karen Ashe’s lab. The results of their experiment had been celebrated as evidence for the amyloid cascade hypothesis. Here, the believers said, was support for the theory that excess amyloid leads to cognitive impairment.

Scientists track how many times a paper is cited by others because it’s a rough indication that the finding was noteworthy. The Lesné paper was cited more than 2,300 times. And the images were the crux of the results. They revealed the presence of Aβ*56 in the impaired mice. The Western blots and other figures were the evidence.

Schrag told the federal government about his concern — after all, the work had been supported by the National Institutes of Health — and he also told Nature. Then he told Science magazine, which asked a few experts in image analysis if they agreed with Schrag’s conclusions. They did. In July 2022, the magazine published a story by Charles Piller on both the simufilam research and Lesné’s paper. Schrag’s appearance in Science was how Patrick first came to interact with him, a connection that bridged the fringe world of the sleuths with the more accepted world of academia. Their mutual commitment to scientific integrity became a joint mission. “We’re not cranks,” Schrag said. “We’re not people hiding in a basement somewhere and just creating trouble on the internet.”

The exposé was widely covered in the media, but action to correct the scientific record would not come swiftly.

##

Scientific fraud has existed for as long as people have stood to benefit from it. In the early 1980s, Harvard Medical School heart researcher John Darsee faked data in animal research on heart attack treatments. Beginning in the early 1990s, Japanese researcher Yoshitaka Fujii, an anesthesiologist, fabricated more than 170 papers. And Massachusetts anesthesiologist Scott Reuben fabricated data in at least 21 studies dating back to the 1990s, several of which highlighted the benefits of pain medications made by Pfizer, which had supported much of his research.

In 2016, Bik, the well-known image sleuth, who holds a Ph.D. in microbiology, co-authored a study showing that nearly 800 of 20,621 papers she and her colleagues examined contained problematic figures. Most of these did not seem like innocent errors; a 2012 study found that two thirds of all retractions are due to misconduct, not mistakes.

To the sleuths who congregate on PubPeer and on X — Bik, Patrick, and many others, sometimes including Schrag — this fakery is emblematic of how science has departed from its original purpose of understanding the natural world. Academic research requires funding and funders want proof that they are making a wise investment. Young investigators have to produce results to win grants and job offers. Senior faculty need to preserve their labs and reputations.

Jana Christopher, an image analyst for the Federation of European Biochemical Societies, which publishes several journals, says she has seen the effect of the pressure to publish on scientists. “People are going to try and be fast,” she said. “People are maybe being bullied in their work environment into working faster, coming up with desired results in a certain timeframe. I think in some labs, the atmosphere can be quite toxic and people are not necessarily protected from that in the right way.”

Many people concerned about scientific integrity see the big egos of star scientists as a contributing factor. Bik called out the work of French physician and microbiologist Didier Raoult after noticing he was listed as the senior author on a paper stating that hydroxychloroquine — a drug prescribed for autoimmune conditions like lupus and rheumatoid arthritis — could be used as a treatment for Covid-19. Raoult and Bik subsequently traded barbs on social media and elsewhere over her criticism of this work, and of Raoult’s wider publication record. (In response to a request to Raoult for comment, his fellow researcher and frequent co-author, Philippe Brouqui, forwarded a letter that he said had also been sent to Science magazine, in which Raoult defended himself against critiques by Bik and others.)

Schrag places much of the blame on the scientific publishing industry. Journals are self-managed, and little oversight exists to address suspected misconduct. And when someone catches problems with published data, the journals are not responsible for identifying whether they stemmed from deliberate misconduct. Meanwhile, the publications’ profits have come under increasing scrutiny. Nature charges around $11,400 to publish a study as open access, even though, by one estimate, it costs at most around $1,000 to move a study from submission to publication. Elsevier, which owns 2,900 journals, reported more than $3.5 billion in revenue for 2022. As Schrag and others see it, the profit coupled with the lack of regulation creates an imbalance that favors abundant publishing but not the time-consuming process of reviewing potential errors.

In an email to Undark that Katie Baker, communications director for publishing and research services at Springer Nature, asked be attributed to a spokesperson, the publisher noted that the article processing charge “reflects the costs of publishing a paper in Nature or a Nature research journal.” Simply, the review and editorial process involved in steering papers from submission to publication requires time and expertise that add up to a significant expense.

The fraught dynamics don’t end here. Boris Barbour, a neuroscience researcher and organizer of PubPeer, and Adam Marcus, a co-founder of Retraction Watch, both noted that universities often seem reluctant to investigate allegations of misconduct. Universities are charged with investigating any allegations of misconduct made against the researchers they employ. The Office of Research Integrity, the U.S. agency that oversees much federally funded science, has proposed new rules aimed at giving ORI more insight into the process and findings of campus investigations. But identifying a researcher engaged in misconduct could risk losing federal funding, as well as tarnishing the institution’s reputation.

Even when journals and academic institutions are diligent about uncovering bad practices, the investigation can be stonewalled by authors, say journal editors. Sarah Jackson, executive editor at the Journal of Clinical Investigation, said sometimes authors fail to provide the necessary source material to confirm the published data, or become unreachable. “I still see every range of response from senior investigators,” she said.

When the City University of New York investigated the work of neuroscientist Hoau-Yan Wang, who collaborated with Cassava on the research of simufilam, the report produced stated that Wang failed to provide a single piece of original material, responding that crucial records had been thrown away during a Covid-19 cleaning frenzy.

Many journals have taken steps to cope with image tampering, but not enough, it seems, to staunch the problem. “And these days,” says Barbour, “the slight improvements we’re seeing from the journals are exposing how bad institutions are.”

The consequences for speaking up are worrisome, says Barbour. Young scientists who spot something awry risk ending their careers for calling out the boss. A lawyer for Raoult accused Bik of harassment and blackmail after she flagged issues with many of his papers. In April 2021, one of Raoult’s colleagues also posted a screenshot on X that revealed Bik’s home address, as she and Barbour were listed as the subjects of a legal complaint. Likewise, Purdue University biologist David Sanders went public with allegations of potential image manipulation in some articles by famed cancer researcher Carlo Croce. In response, Croce sued Sanders and The New York Times for defamation, and the Board of Trustees at The Ohio State University, his employer, for inappropriately removing him from an appointment as department chair. (Croce lost the cases against Sanders and The New York Times, and last year, a court ordered a local sheriff to seize and sell Croce’s property, to pay more than a million dollars in outstanding legal fees. Croce won an appeal on the initial ruling against him in the third case, which is currently still open.) “In general, the whole environment is incredibly structurally hostile to criticizing work,” said Barbour.

For the crowd at the Golden Tiger and Schrag, the only way to restore integrity to research is by drawing attention to the problems, no matter the cost, no matter who listens, and no matter who they upset.

##

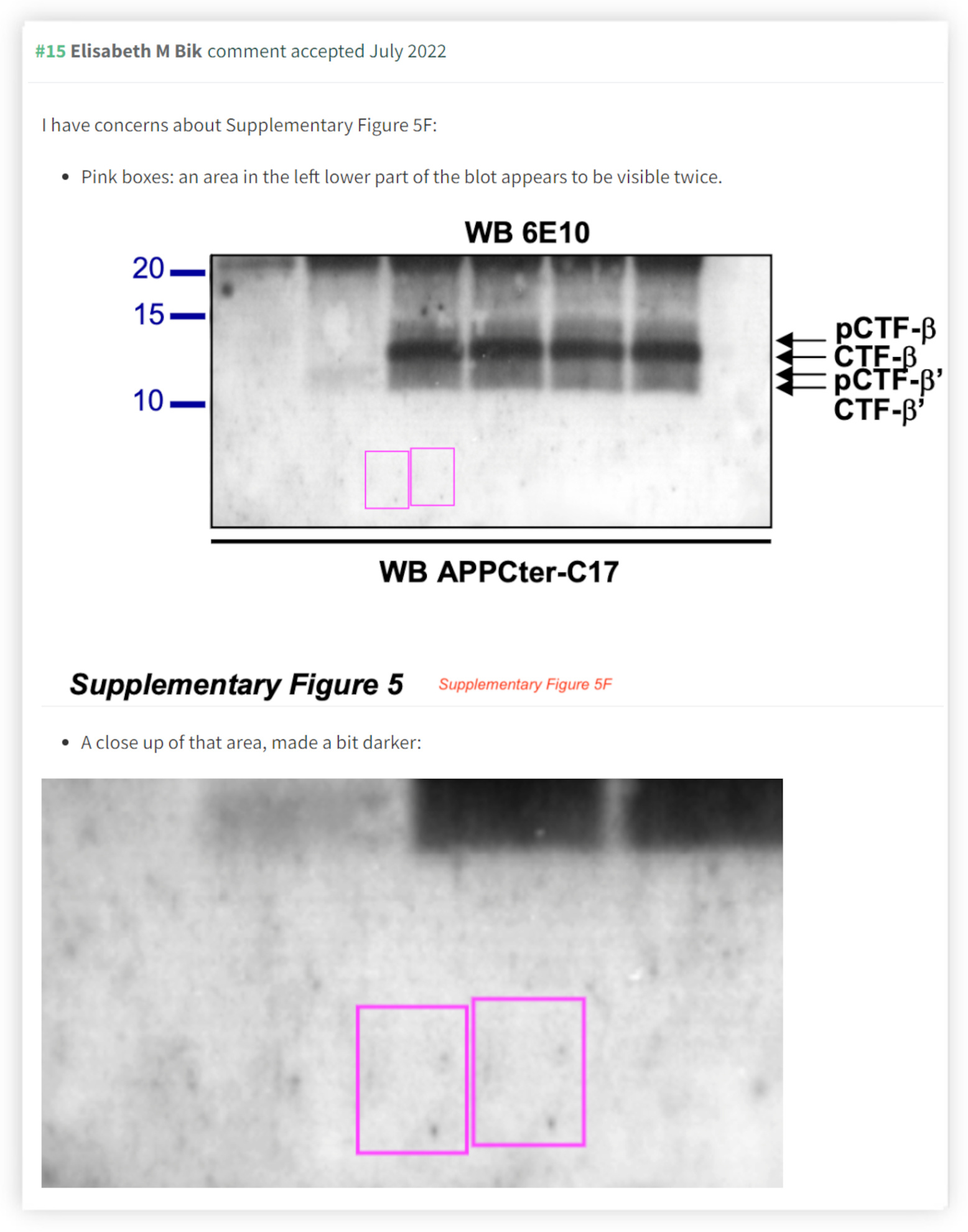

The first PubPeer comments on Lesné’s Alzheimer’s paper appeared in January 2022, six months before Science reported Schrag’s findings of image manipulation. “I have concerns about supplementary figure 4,” a comment said in the neutral language enforced on the site. The figure showed a Western blot. Several such images in the paper showed dark bands affirming the presence of Aβ*56 in Alzheimer’s-like mice. Readers of the study saw the images as further support for the ACH when it was first published.

Now, 16 years after the paper was published, another comment — Schrag says all of those that appeared that January were posted by him — flagged that several bands appeared to have been copied and pasted. More PubPeer comments flowed in. One flagged splice marks in figure 1, also a Western blot, signaling that the image appeared to have been cut to add or remove rows of bands. Other comments pointed out issues with additional figures.

In June 2022, Karen Ashe, Lesné’s supervisor and the senior author on the paper, responded to some of the PubPeer comments. Ashe was, and remains, a highly regarded neuroscientist. In 1996, she pioneered research to genetically engineer mice that overproduced amyloid beta and developed dementia-like symptoms. She is recognized as a leading scholar in Alzheimer’s research. And though Lesné was listed as first author, she had overseen his research of Aβ*56 and was responsible for the validity of the paper.

PubPeer users noted Ashe’s willingness to answer questions about the paper. She provided several original images that had been submitted to Nature and said that the anomalies in the images were introduced during the publishing process, an explanation Bik found plausible for some figures since converting them to a publishable format requires digital processing that can lead to subtle but meaningful changes. But issues with other images remained.

A week before the Science story came out broadcasting the problems that Schrag and others had noticed, Nature attached a note to the online version saying that the journal had “been alerted to concerns regarding some of the figures” and was investigating them. Other questions on PubPeer flagged additional issues. Bik spotted what appeared to be outright duplications in one figure; a magnified view highlighted an area that seemed to have been copied and pasted, which would make the entire image untrustworthy.

PubPeer users have raised concerns about manipulated images on several of Lesné’s other papers, including one that, according to a comment from Bik, appeared to use a figure lifted straight from another paper. (Ashe declined to comment for this story. Lesné did not respond to Undark’s requests for comment.) Schrag hoped for a swift retraction. “It’s absolutely critical,” he said, emphasizing that “as a field, we need to take a stand for correcting clearly erroneous data.” The 2006 paper, Schrag believed, was undoubtedly wrong.

Staff charged with evaluating such complaints insist the slow pace is not their fault. Springer Nature, which publishes more than 3,000 journals, received about 1,600 such queries in 2022. Tim Kersjes, who leads the resolutions team within the publisher’s research integrity department, acknowledged that investigations can take a long time. “Ideally a concern comes in, we investigate it, and we can retract two weeks later,” he said. “But in practice that’s impossible.” Kersjes said that authors don’t always respond to emails, or they send explanations that require further review. Marcus noted that the peer review process isn’t geared toward screening images for signs of tampering or catching other types of misconduct. Jackson, at the Journal of Clinical Investigation, said that the fact that some scientists are willing to fake their data caught publishers off guard.

The growing cadre of sleuths see the matter differently. They are catching problems that, in some cases, they say should never have made it past a first review. Jana Christopher quickly spotted issues when she reviewed the images Schrag had flagged in Lesné’s paper and the simufilam studies, though she emphasized that image-screening experts and automated tools were not widely available at the time Lesné’s paper was published.

The sleuths and other science integrity watchdogs rankled the publishing industry initially. “We certainly had journals tell us basically, go fuck off, we don’t have to answer to you,” said Marcus. “That doesn’t happen quite so much anymore.” Still, editors remain wary of PubPeer, which has increasingly become a forum for catching misdeeds rather than discussing new discoveries, which was its initial purpose.

“We’ve seen some folks that have taken it on as a personal mission, that they are on a crusade to root out fraud,” said Jackson. “Those individuals may feel like the journal is beholden to them personally and they don’t always respect that there has to be a process for investigating these things.” Universities may also have trepidation about engaging with individuals who call out their researchers on PubPeer or in direct correspondence to the institution. “Researchers are well aware that these types of allegations, whether substantiated or not, can be career-ending,” said Kate Gallin Heffernan, a lawyer who works with institutions on misconduct investigations. She says that even when a researcher provides a satisfactory response, an exchange on PubPeer or X can leave a stain.

Nevertheless, the scientific literature appears to be riddled with unreliable studies. A New Yorker profile of Bik noted that of the 782 papers she’d found to have problematic images in her 2016 analysis, at most 10 had been resolved by the authors disproving her concerns. An analysis by Nature found that a record 10,000 papers were retracted in 2023. PubPeer users uncovered possible issues with images in papers co-authored by former Stanford University president Marc Tessier-Lavigne that made headlines in 2023. (Though Tessier-Lavigne stepped down as president, an investigation commissioned by the university’s board of trustees exonerated him of research misconduct.)

A 2021 analysis of PubPeer comments found that two-thirds of the comments on the site center on image anomalies, and most of these studies pertain to health and medicine. In other words, they may directly affect people’s lives. Some journals have begun to use AI to scan submissions for image issues. Two entrepreneurs in Austria created ImageTwin for this purpose, and the U.S. federal government also provides resources for accessing and using image scanning software. But even Markus Zlabinger, who co-founded ImageTwin, emphasized that their software is a starting place that helps editors know when to take a closer look. A person must always make the final call, he said.

##

The sleuths vary in their approach toward the publishing industry. Some can appear aggressive. Leonid Schneider, whose website For Better Science has chronicled egregious issues — such as a paper mill that appears to be behind hundreds of publications discovered by Bik, Smut Clyde, and others — tends to make harsh accusations. Another sleuth, who uses the pseudonym Claire Francis, has antagonized journals with frequent messages demanding action. “You are complicit in fraud,” Claire Francis told one staffer in correspondence about a corrected paper. Entire pieces have been written about how to handle anonymous whistleblowers like Claire Francis. Bik takes a more neutral approach, preferring to work with publishers and not as their adversary. She tags authors on social media only when they are towering figures in their fields, preferring to protect vulnerable young researchers from the mean-spirited rebukes that X tends to attract.

Regardless of how each expresses it, though, many of the sleuths share the same outrage. They are frustrated and angry at the sheer amount of research misconduct and the extent to which researchers may get away with manipulating or faking data because journals and universities don’t take a stand. Others emphasize that tackling these issues is simply part of the job of a scientist.

In Science’s July 2022 report on the Lesné scandal, Schrag said he allowed the publication to use his identity because he believes science has to be candid and transparent. But that ideal doesn’t mean he’s escaped repercussions. He says he was subpoenaed to provide documents for an investor lawsuit against Cassava, which, by his count, resulted in tens of thousands of dollars in legal fees and required a significant investment of time. He says when Cassava also subpoenaed him for documents, a foundation called the Scientific Integrity Fund stepped in to help with his legal expenses. He had stood up for the cause of research integrity and fought a pharmaceutical Goliath, and now he was dealing with the aftermath. “You can lose even if you win, right?” he said.

The losses have been emotional too. His work outing dubious papers led to the identification of problematic images on his own CV. An early mentor, Othman Ghribi, allegedly manipulated images in a few published studies they’d co-authored. After hearing about the alleged manipulations and confirming them for himself, Schrag said he knew he had to alert the journals and ask for retractions. “I think there is a time to stand up and say ‘Yep, even though it’s somebody I really care about, we can’t have this in our business,’” he said.

Regardless of the debates over how important the Lesné study has been to Alzheimer’s research, the tampered images and lack of closure to Nature’s investigation became, to many, emblematic of just how damaged science has become.

The matter finally came to a head in mid-2024. In May, Ashe posted a response to Schrag’s June 2023 analysis of a replication study she conducted. She acknowledged the “misrepresentation of data,” but also maintained her conviction that experiments she and colleagues had conducted and written up in a March 2024 paper had reproduced the main findings of the original work. A correction, rather than a retraction, would keep the scientific record accurate.

Schrag, though, maintained that the new results did not support the original conclusion. Bik also called for a retraction on the simple grounds that the paper contained tampered work and was therefore unreliable, regardless of what the replication experiment had found. Finally, Ashe informed PubPeer that the editors at Nature had not agreed to the correction and therefore she and all other authors except Lesné had decided to retract the 2006 paper.

On June 24, 2024, about two and a half years after the first concerns appeared on PubPeer, Nature retracted the study. Undark asked representatives at Nature about this time frame. In an email that Michael Stacey, head of communications for journals with Springer Nature Group, asked to be attributed to a spokesperson, he wrote: “Our investigations follow an established process, which involves consultation with the authors and, where appropriate, seeking independent advice from peer reviewers and other external experts. Other factors, such as awaiting the outcome of institutional investigations, where appropriate, can also impact the length of time an investigation takes.” The University of Minnesota, meanwhile, concluded its own review of the paper with no findings of research misconduct.

##

Schrag believes that the amyloid cascade hypothesis would have faded from view long ago if science had more integrity. “I think it’s become much more of a political entity than a scientific one,” he said.

In January 2023, the FDA approved another amyloid-targeting drug, lecanemab, under its Accelerated Approval pathway. Study participants who took the drug for 18 months had moderately less cognitive decline compared to patients who took the placebo, according to the results published in Alzheimer’s Research & Therapy in late 2022.

But to Schrag, the entry of lecanemab onto the market underscored how urgently the field needs to move on from targeting amyloid beta. The hypothesis, he said, is wrong. A growing number of researchers agree, though many, including Schrag, say that amyloid is probably one component of a disease with many contributing factors. In January 2024, Biogen announced that it would stop developing and marketing aducanumab, the controversial 2021 approval, to focus its resources on lecanemab. In July 2024, the FDA approved donanemab, which also targets amyloid. Multiple patients in both the lecanemab and donanemab clinical trials experienced brain swelling or bleeding. Three patients in the lecanemab trial died.

The sleuths have continued to flag issues with long-upheld research. Toward the end of 2023, Schrag, Bik, Patrick, and Columbia University neuroscientist Mu Yang sent a lengthy dossier to the NIH detailing problems with the work of famed stroke researcher Berislav Zlokovic. Bik knows the complaint may not yield much. After all, toward the end of 2023, CUNY halted its investigation of Hoau-Yan Wang, the Alzheimer’s researcher who led many studies of simufilam. (CUNY would not comment on the status of its investigation, noting only that Wang is on administrative leave, though a federal grand jury indicted him this summer on charges of fabricating and falsifying data in grant applications.) And regarding the University of Minnesota’s review of the 2006 Nature paper, a spokesperson told Undark that there were “no findings of research misconduct pertaining to these figures,” but could not share more detail due to state law.

Asked about the university’s conclusion, Bik was incredulous. “I don’t understand how you can come to that conclusion,” she said. “In my professional opinion and that of several others, these photos have been altered.” Bik added that the findings seemed to signal that the issues surrounding the now-retracted paper were not “a big deal.”

“But it was,” she said. “It was a big deal.”

Still, the sleuths are tenacious in their cause. After gathering in Porto, Portugal, in July 2024, they are now planning a third summer summit in Krakow, Poland.

##

UPDATE: This article has been updated to clarify the nature of allegations made by Purdue University biologist David Sanders regarding instances of potential image manipulation in some articles by cancer researcher Carlo Croce.

Jessica Wapner is a widely published journalist, writer, and producer. She is the author of “The Philadelphia Chromosome” and “Wall Disease,” and the reporter and co-host of the podcast One Click.

Jane Reza contributed reporting for this story.

____

This article was originally published at Undark and is republished under the Creative Commons license.